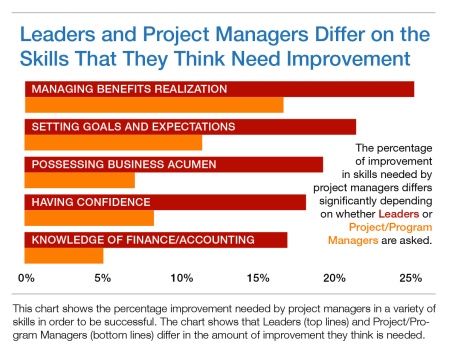

My last post noted that organizations need three kinds of improvement: filling gaps, improving skill mastery, and driving behavior change. But how can you design these objectives into your talent improvement program? If you already have a program in place, how do you know you have the right mix? And how do you measure its impact on the organization?

Who are the truly competent initiative leaders in your organization? And how do you know?

Any competency improvement plan starts with identifying what the “truly competent” project or program manager looks like for your particular organization. We intuitively know that more competence pays for itself, and there is strong evidence for that intuition in our Building Project Manager Competency white paper (available on request). But lasting improvement will only come from a structured and sustained competency improvement program. That structure has to begin with an assessment of existing competency levels. Furthermore, the program must include clear measures of business value, so that every improvement in competence can be linked to improvements in key business measures.

My experience with such programs is that PMO and talent management groups approach the process in a way that muddles cause and effect. For example, a training program is often paired with PMO set-up. Fair enough. However, if the training design is put into place without a baseline of the current competence of your initiative leaders, then that design may perpetuate key skill or behavior gaps among your staff. You may hit the target, but a scattershot strategy leans heavily on luck.

In addition, this approach will leave you guessing about which part of your training had business impact. You may see better business outcomes, but not have any better idea about which improved skills and behaviors drove them. Even worse, if your “hope-based” design and delivery is followed by little improvement, then your own initiative may well be doomed.

So how should you fix your program, or get it right from the start? We at PM College lay out a structured, five-step process for working through your competency improvement program.

1. Define Roles and Competencies
2. Assess Competencies
3. Establish a Professional Development Program with Career Paths
4. Execute Training Program
5. Measure Competency and Project Delivery Outcomes Before and After Training

These steps were very useful for structuring my thinking, but they’re more of a checklist than a plan. For example, my PMOs almost always had something to work with in Steps 1 and 3. Even if I didn’t directly own roles and career paths, I had credibility and influence with my colleagues in human resources. However, the condition of the training program was more of a mixed bag. Sometimes I would have something in place, sometimes I was starting “greenfield.”

The current state of the training program informs how I look at these steps.

- Training program in place: My approach is to jump straight to Step 5 and drive for a competency and outcome assessment based on what went before. I treat Steps 1-4 as complete, even if they were never made explicit, and position the assessment as a validation of what came before. In other words, this strategy is a forcing function that stress-tests the whole competency program without starting anew.

- No training program in place: I use the formal assessment to drive change. As PMO head I have been able to use its results to explicitly drive the training program’s design. More significantly, these results are proof points driving better role and career path designs, even if HR formally owns those choices.

PM College has a unique and holistic competency assessment methodology that assesses knowledge, behaviors, and job performance across the project management roles in your organization. As always, if your organization would like to discuss our approach and how it drives improved project and business outcomes, please contact me or use the contact form below. We’d love to hear from you.

FYI: For more reading on competency-based management, check out Optimizing Human Capital with A Strategic Project Office.
